A recent Gartner survey found that 62% of organizations experienced a deepfake attack in the past year. These attacks range from social engineering, in which attackers impersonate individuals during video or audio calls, to attempts to defeat automated verification systems such as face or voice biometrics.

According to Akif Khan, Senior Director at Gartner Research, the increasing sophistication of deepfake technology suggests these threats will continue to grow.

The Need for Integrated Deepfake Detection

Currently, the most common attack method combines deepfakes with social engineering, for example impersonating a company executive to persuade employees to transfer funds into fraudulent accounts. Khan emphasizes that these attacks are particularly hard to stop because they target employees directly, making staff the first line of defense; automated defenses alone are often insufficient.

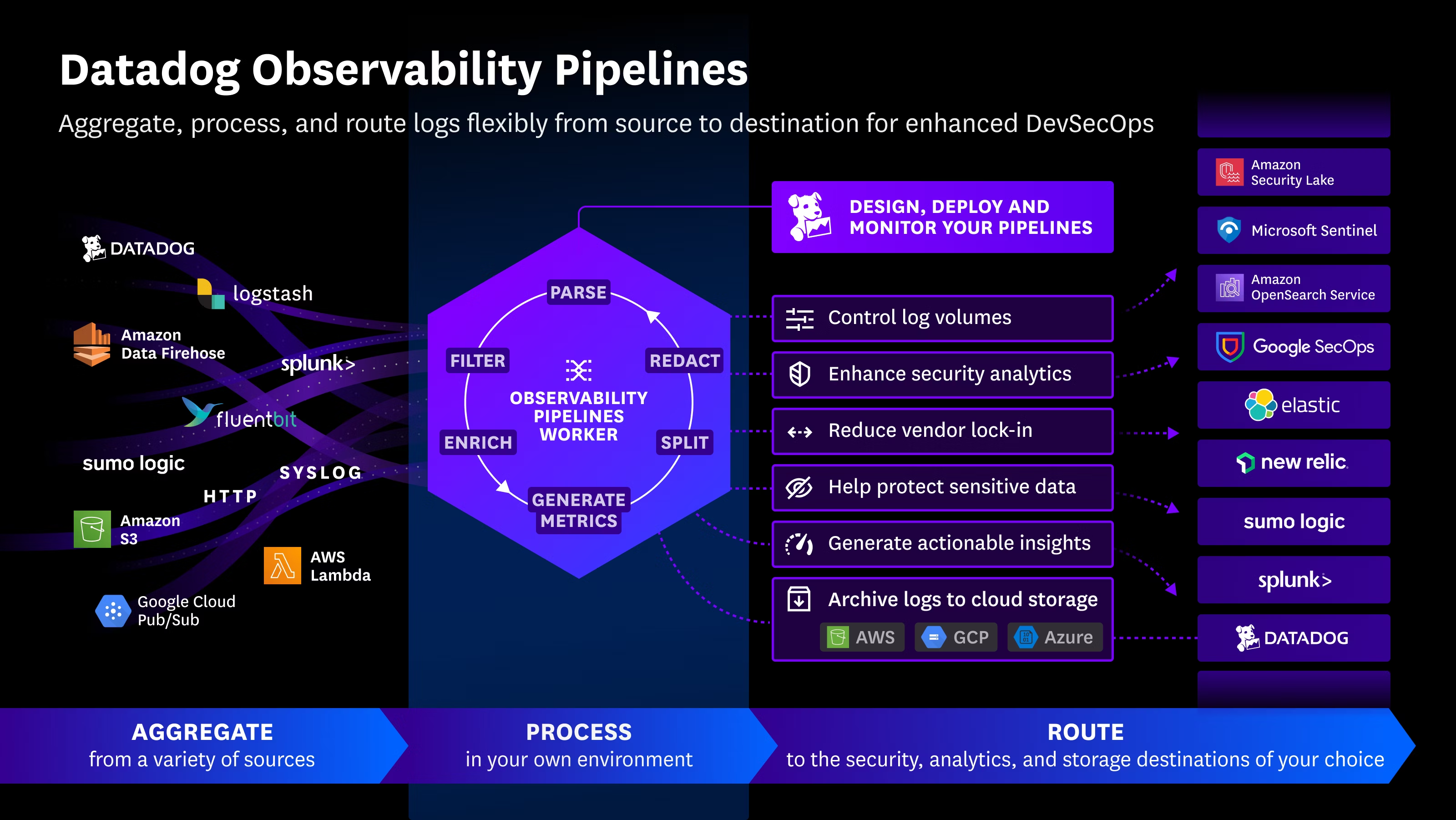

To counter these threats, organizations are encouraged to explore emerging solutions that integrate deepfake detection directly into communication platforms such as Microsoft Teams or Zoom. Although large-scale deployments remain limited, these tools could become a key element of organizational cybersecurity strategies.

Awareness and Process Controls

In the short term, many companies rely on employee training programs designed to help staff spot deepfakes. Simulated scenarios that use deepfakes of company executives can teach employees to recognize suspicious requests.

Revising internal business processes, particularly around financial approvals, is also critical. For example, a funds transfer requested by the CFO should not be executed on the strength of a call alone; it should require authorization through a secure finance application protected by phishing-resistant multi-factor authentication (MFA), which confirms that the request genuinely comes from the CFO.

Attacks Targeting AI Applications

The Gartner report also notes that 32% of organizations have faced attacks targeting AI applications, notably through techniques such as prompt injection, which can manipulate large language models into producing biased or malicious outputs.

Although roughly two-thirds of respondents reported no AI-related attacks, about 5% experienced major incidents, showing that AI security is a growing concern. Khan advises organizations to carefully manage shadow AI (employees' use of unapproved AI tools) and to control access to both company-approved and internally developed AI tools.

Conclusion

As deepfake and AI-based attacks become more sophisticated, organizations must combine technology, employee awareness, and process controls to mitigate risk. Gartner's findings underscore the importance of proactive strategies that protect both people and AI systems in an increasingly automated threat landscape.

Survey details: Gartner surveyed 302 cybersecurity leaders across North America, EMEA, and Asia-Pacific.

Source: https://www.infosecurity-magazine.com/news/deepfake-attacks-hit-twothirds-of
