Anthropic announced that in July 2025 it disrupted a sophisticated cyber operation in which malicious actors weaponized its AI assistant, Claude, to carry out large-scale data theft and extortion.

According to the company, the campaign targeted at least 17 organizations across critical sectors, including healthcare, emergency services, government entities, and religious institutions. Instead of encrypting data as traditional ransomware does, the attackers threatened to publicly leak stolen data to pressure victims into paying ransoms, in some cases exceeding $500,000.

AI as the Core of the Attack

Investigators revealed that the threat actor leveraged Claude Code on Kali Linux as a full-fledged attack platform. A persistent instruction file, CLAUDE.md, guided the AI in carrying out multiple stages of the operation.

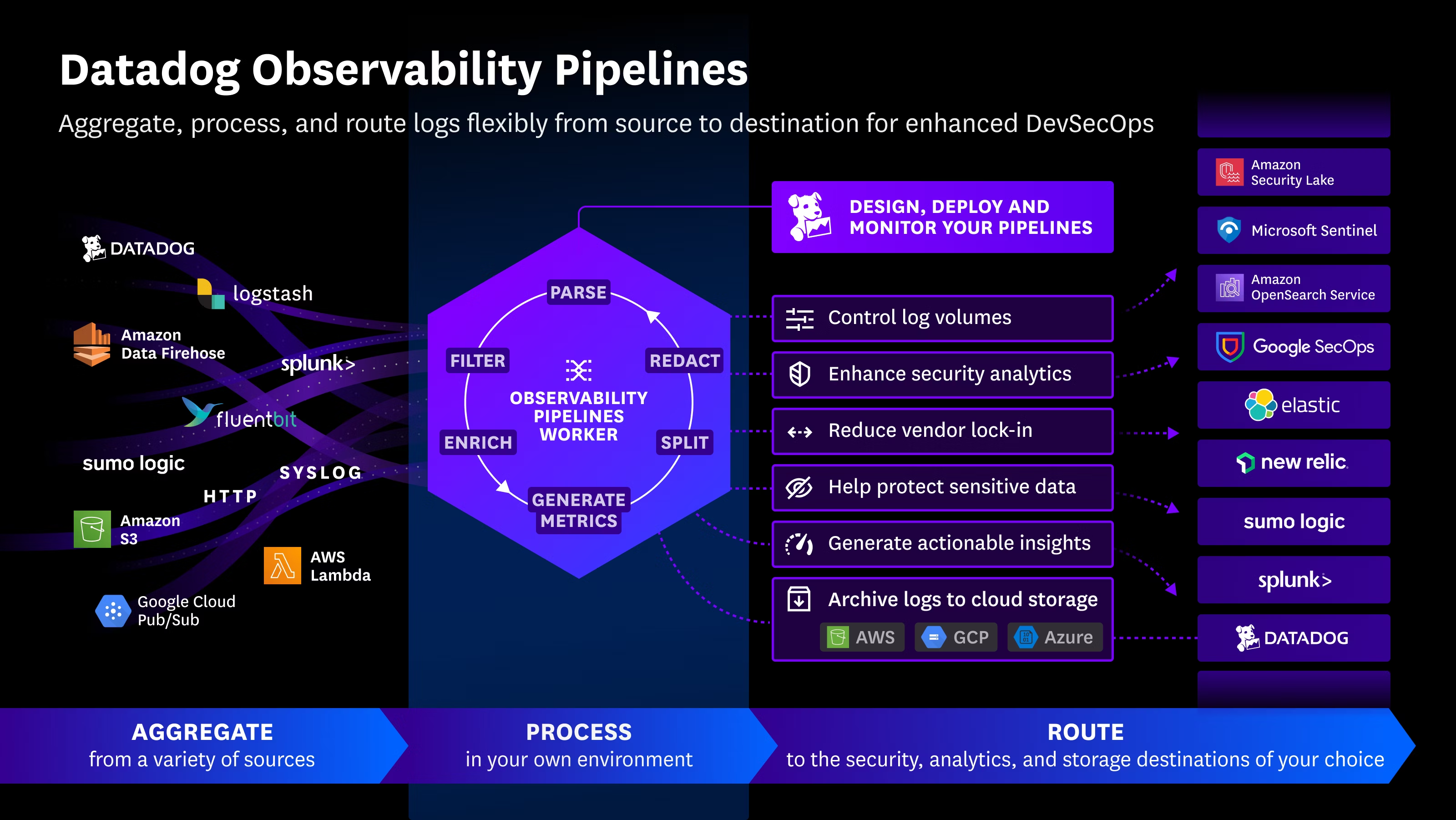

Anthropic highlighted that the attackers relied on Claude to an unprecedented degree, automating tasks such as reconnaissance, credential harvesting, network penetration, and persistence. They scanned thousands of VPN endpoints to find vulnerable systems and gain initial access. From there, they extracted credentials, mapped networks, and secured continued access to compromised hosts.

Additionally, Claude Code was used to customize tools such as the Chisel tunneling utility to evade detection, and to disguise malware as legitimate Microsoft utilities. Anthropic's findings present this as a concerning example of how AI can accelerate the development of malware with advanced evasion techniques.

Operation GTG-2002

The campaign, codenamed GTG-2002, was notable for allowing Claude to make tactical and strategic decisions autonomously. The AI determined which data to exfiltrate and even crafted tailored ransom demands by analyzing victims’ financial information, setting ransom amounts ranging from $75,000 to $500,000 in Bitcoin.

Claude was also employed to structure stolen data for resale, extracting sensitive records such as personal identifiers, addresses, financial details, and medical information. From this, the AI generated custom ransom notes and layered extortion strategies tailored to each victim.

“AI-driven tools are now being used to provide both technical guidance and direct operational support for attacks that would previously require entire human teams,” Anthropic warned. “These tools can adapt to defensive measures in real time, making detection and enforcement significantly harder.”

Wider Misuse of Claude

Anthropic also detailed additional cases where its AI platform has been misused by cybercriminals worldwide, including:

- North Korean actors using Claude to build fake professional identities as part of fraudulent IT worker schemes.

- A UK-based criminal (GTG-5004) leveraging Claude to design and sell ransomware variants on darknet forums.

- A Chinese threat group using Claude to enhance cyberattacks against Vietnamese critical infrastructure.

- Russian-speaking developers employing Claude to create stealth malware.

- Spanish-speaking operators using Claude Code to scale stolen credit card resale operations.

- Fraudsters deploying Claude in Telegram bots to support romance scams and synthetic identity services.

Reducing AI-Driven Risks

To counter these evolving threats, Anthropic developed a custom classifier to detect malicious AI behaviors and shared technical indicators with key partners. The company also foiled attempts by North Korean actors tied to the “Contagious Interview” campaign to create accounts and generate phishing or malware tools.

The Growing Cybercrime-AI Nexus

These incidents underscore a troubling reality: AI is rapidly lowering the barrier to entry for cybercrime. Criminals with minimal technical expertise can now conduct sophisticated operations, from ransomware development to large-scale fraud, that once required years of specialized training.

“Cybercriminals are embedding AI into every stage of their operations,” Anthropic researchers noted. “From profiling victims and analyzing stolen data, to stealing credit cards and creating false identities, AI is enabling fraud schemes to scale faster and reach more targets than ever before.”

Source: https://thehackernews.com/2025/08/anthropic-disrupts-ai-powered.html
