More than half of spam and malicious emails are now generated by artificial intelligence, according to new findings from cybersecurity firm Barracuda, produced in collaboration with Columbia University and the University of Chicago.

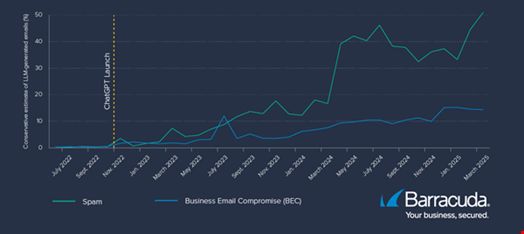

The team analyzed millions of spam messages intercepted by Barracuda between February 2022 and April 2025. By applying specialized detection tools, they were able to determine whether the content had been created by AI systems.

The data revealed a steady rise in AI-generated spam beginning in late 2022, coinciding with the launch of ChatGPT, which brought large language models (LLMs) to a mass audience. The proportion of AI-generated messages spiked sharply in March 2024 and reached 51% in April 2025.
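To make the headline figure concrete, the sketch below shows one way a monthly share of AI-generated messages could be computed once each message carries a detector verdict. It is only an illustration, not the researchers' pipeline: the function name `monthly_ai_share`, the record layout, and the sample records are all hypothetical, and the toy values are made up rather than taken from the study.

```python
from collections import defaultdict
from datetime import date


def monthly_ai_share(messages):
    """Return {(year, month): fraction of messages flagged as AI-generated}.

    `messages` is an iterable of (date_received, is_ai) pairs, where `is_ai`
    is the verdict of some AI-text detector (assumed to exist upstream).
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for received, is_ai in messages:
        key = (received.year, received.month)
        totals[key] += 1
        if is_ai:
            flagged[key] += 1
    # Sort keys so months come out in chronological order.
    return {key: flagged[key] / totals[key] for key in sorted(totals)}


# Toy, made-up records for illustration only; not data from the study.
sample = [
    (date(2025, 3, 2), True),
    (date(2025, 3, 9), False),
    (date(2025, 3, 17), False),
    (date(2025, 3, 30), False),
    (date(2025, 4, 1), True),
    (date(2025, 4, 8), True),
    (date(2025, 4, 15), False),
    (date(2025, 4, 22), False),
]

shares = monthly_ai_share(sample)
for (year, month), share in shares.items():
    print(f"{year}-{month:02d}: {share:.0%} flagged as AI-generated")
```

Grouping by calendar month is an assumption about how such a trend might be charted; any real figure also depends on how accurate the underlying detector is.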

Speaking to Infosecurity Magazine, Columbia University Associate Professor Asaf Cidon said the exact cause of this jump is still uncertain. He speculated that it may be linked to the release of newer AI models or shifts in attacker strategies that favor machine-generated content.

The researchers also examined AI’s role in business email compromise (BEC) schemes—targeted fraud attempts often impersonating executives to request unauthorized financial transfers. Here, AI-generated content accounted for 14% of cases in April 2025, showing slower adoption likely due to the nuanced nature of these scams.

However, Cidon anticipates a rise in AI use within BEC attacks, particularly as voice cloning technologies mature.

“We expect to see deepfake audio used to convincingly mimic executives like CEOs in future scams,” he warned.

Why Attackers Use AI

The study identified two main reasons why cybercriminals are adopting AI: to bypass email security filters and to make their messages seem more authentic. Compared to human-written phishing emails, those created with AI were generally more polished—featuring better grammar, formal tone, and sophisticated language.

This is especially effective when attackers are targeting English-speaking recipients but are not native speakers themselves. The report noted that the majority of targeted users were based in English-dominant countries.

Interestingly, attackers were seen using AI much like marketers use A/B testing—experimenting with slight changes in wording to see which variations evade detection more successfully.

One common element in phishing emails—creating a false sense of urgency—remained consistent between human- and AI-written messages. This suggests AI isn’t changing attackers’ tactics, but is making those tactics more effective.

As cybercriminals continue to refine their use of AI, experts warn that email security solutions must evolve just as quickly to counter these sophisticated threats.

https://www.infosecurity-magazine.com/news/ai-generates-spam-malicious-emails/
