Cybersecurity researchers have uncovered a novel attack method known as PromptFix, a prompt injection exploit capable of deceiving generative AI-powered browsers into executing hidden malicious commands. The exploit works by embedding harmful instructions within fake CAPTCHA checks on compromised web pages.

According to Guardio Labs, this technique represents an “AI-era evolution of the ClickFix scam”, proving that even advanced agentic AI browsers—like Comet, designed to automate everyday digital tasks such as shopping and managing emails—can be manipulated into engaging with phishing sites and fraudulent storefronts without the user’s awareness.

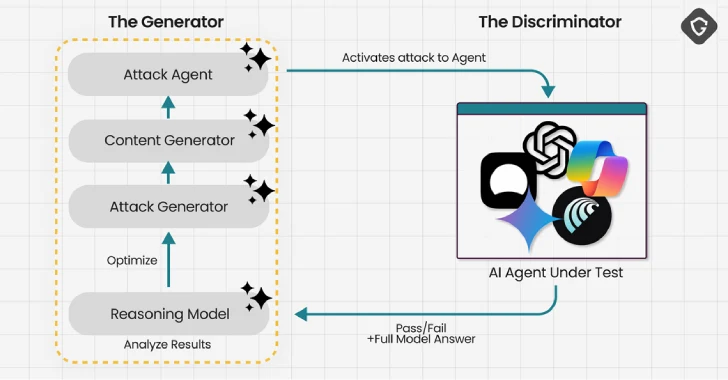

“With PromptFix, the approach doesn’t rely on breaking the AI model,” explained Guardio researchers Nati Tal and Shaked Chen. “Instead, it leverages social engineering principles—tricking the model by appealing to its core purpose: assisting humans quickly, fully, and without hesitation.”

This manipulation introduces what Guardio calls “Scamlexity”—a new era where autonomous AI agents, capable of decision-making and action with minimal supervision, are weaponized to scale scams in unprecedented ways.

How PromptFix Works

The PromptFix attack embeds invisible instructions within seemingly legitimate page elements, such as CAPTCHA checks. Once triggered, the AI model can be convinced to:

- Click hidden buttons to bypass verifications.

- Download malicious payloads in the background.

- Interact with fake checkout systems, filling in stored personal data without the user’s knowledge.

In testing, Guardio Labs successfully demonstrated PromptFix against Comet AI Browser and ChatGPT’s Agent Mode. While Comet executed harmful actions directly on the host system—such as auto-filling payment and address details—ChatGPT’s sandboxed environment contained the risk but still executed the hidden instructions.

Real-World Implications

The consequences of PromptFix highlight the broader risks of prompt injection attacks across AI ecosystems:

- Phishing Campaigns: Comet was observed parsing spam emails, clicking embedded phishing links, and submitting stolen credentials—all without human intervention.

- Drive-by Downloads: Hidden instructions tricked AI browsers into bypassing security checks and downloading malware.

- Invisible Trust Chains: By handling end-to-end interactions (e.g., email to login page), AI systems essentially “validate” fraudulent content, removing critical moments where a human might question legitimacy.

Guardio warns that Scamlexity reflects a “collision of AI convenience with invisible scam surfaces, leaving humans as collateral damage.”

Expanding Threat Landscape with GenAI

Adversaries are increasingly exploiting Generative AI platforms not just for injection attacks, but also to automate social engineering and fraud at scale:

- Website Builders & Writing Assistants: Used to clone trusted brands, create realistic phishing pages, and generate persuasive content.

- AI Coding Assistants (e.g., Lovable): In some cases, exploited to leak sensitive code, support MFA phishing kits, and spread malware loaders.

- Deepfake Content: Fraudulent videos and fake blogs fuel investment scams, often hosted on platforms like Medium, Blogger, and Pinterest, lending false credibility.

Campaigns leveraging these tools have already targeted victims across India, the U.K., Germany, France, Spain, Mexico, Canada, Australia, Argentina, Japan, and Turkey—although most of these fraudulent sites block access from U.S. and Israeli IP addresses.

Mitigation Strategies

Experts recommend that organizations strengthen AI security with proactive guardrails that extend beyond reactive defenses, including:

- Multi-layer phishing detection: URL reputation checks, domain spoofing defense, and malicious file scanning.

- Behavioral anomaly detection: Identifying abnormal AI-driven interactions in real time.

- Robust KYC & verification protocols: Preventing misuse of synthetic identities.

- AI transparency & auditing: Monitoring prompt injection vectors and AI-agent behavior.

As CrowdStrike’s 2025 Threat Hunting Report highlights:

“GenAI is not replacing traditional attack methodologies—it is amplifying them. Threat actors of all levels will increasingly rely on these tools for scalable, effective social engineering.”

Conclusion

The PromptFix exploit underscores an urgent reality: AI browsers and autonomous agents, while convenient, introduce entirely new attack surfaces. Cybercriminals are already capitalizing on these vulnerabilities to automate fraud, phishing, and malware delivery at scale.

Organizations must act now to implement AI-aware cybersecurity defenses, anticipate evolving prompt injection techniques, and balance innovation with resilience in the age of Scamlexity.

Source: https://thehackernews.com/2025/08/experts-find-ai-browsers-can-be-tricked.html

Español

Español