In a major milestone for AI in cybersecurity, Google has announced that Big Sleep, its AI-based vulnerability research system, has discovered and reported its first 20 security flaws in widely used open-source projects.

The news came from Heather Adkins, Google’s VP of Security, who said the findings mostly affect popular open-source libraries such as the multimedia framework FFmpeg and the image-processing suite ImageMagick. Big Sleep is a collaboration between DeepMind, Google’s AI research division, and Project Zero, the company’s elite team of security researchers known for uncovering critical software vulnerabilities.

AI-Driven Discovery, Human-Assured Accuracy

Details of the specific vulnerabilities remain under wraps until fixes are released, but the announcement marks a pivotal step: AI agents are now contributing to real-world vulnerability discovery. According to Google spokesperson Kimberly Samra, Big Sleep found and reproduced each bug without human intervention, although a human expert reviewed every finding before it was formally reported to ensure accuracy.

Pairing automated discovery with human review lets the system search at scale while ensuring that maintainers receive only verified reports, an important safeguard in an area as sensitive as vulnerability disclosure.

A Glimpse Into the Future of Security Research

Google’s Royal Hansen, VP of Engineering, described the result as “a new frontier in automated vulnerability discovery.” The rise of security tools powered by large language models (LLMs), such as Big Sleep, RunSybil, and XBOW, points to a growing ecosystem of AI agents that can identify flaws traditional tools or overburdened security teams might miss.

XBOW recently made headlines for topping one of the U.S. leaderboards on HackerOne, a leading bug bounty platform. However, most of these AI systems, Big Sleep included, still rely on human validation at some point in the process to confirm that their findings are legitimate.

Promise Meets Skepticism

While Big Sleep’s success showcases the potential of AI in security, the approach has its hurdles. Some open-source maintainers have complained about false positives from AI tools, which some call “bug bounty noise” or “AI slop.” These phantom vulnerabilities, produced by AI hallucinations, waste time and resources that already stretched development teams can ill afford.

Even so, Vlad Ionescu, CTO and co-founder of RunSybil, described Big Sleep as a serious contender in this space, crediting the project’s solid foundation: “Good design, a team that knows what they’re doing, Project Zero’s experience in bug hunting, and DeepMind’s powerful AI infrastructure.”

The Road Ahead

Despite these challenges, tools like Big Sleep signal a fundamental shift in how the security community approaches vulnerability discovery. As these systems mature, AI may play an increasingly prominent role in securing both open-source and proprietary software, finding flaws faster as long as human review keeps the findings accurate.

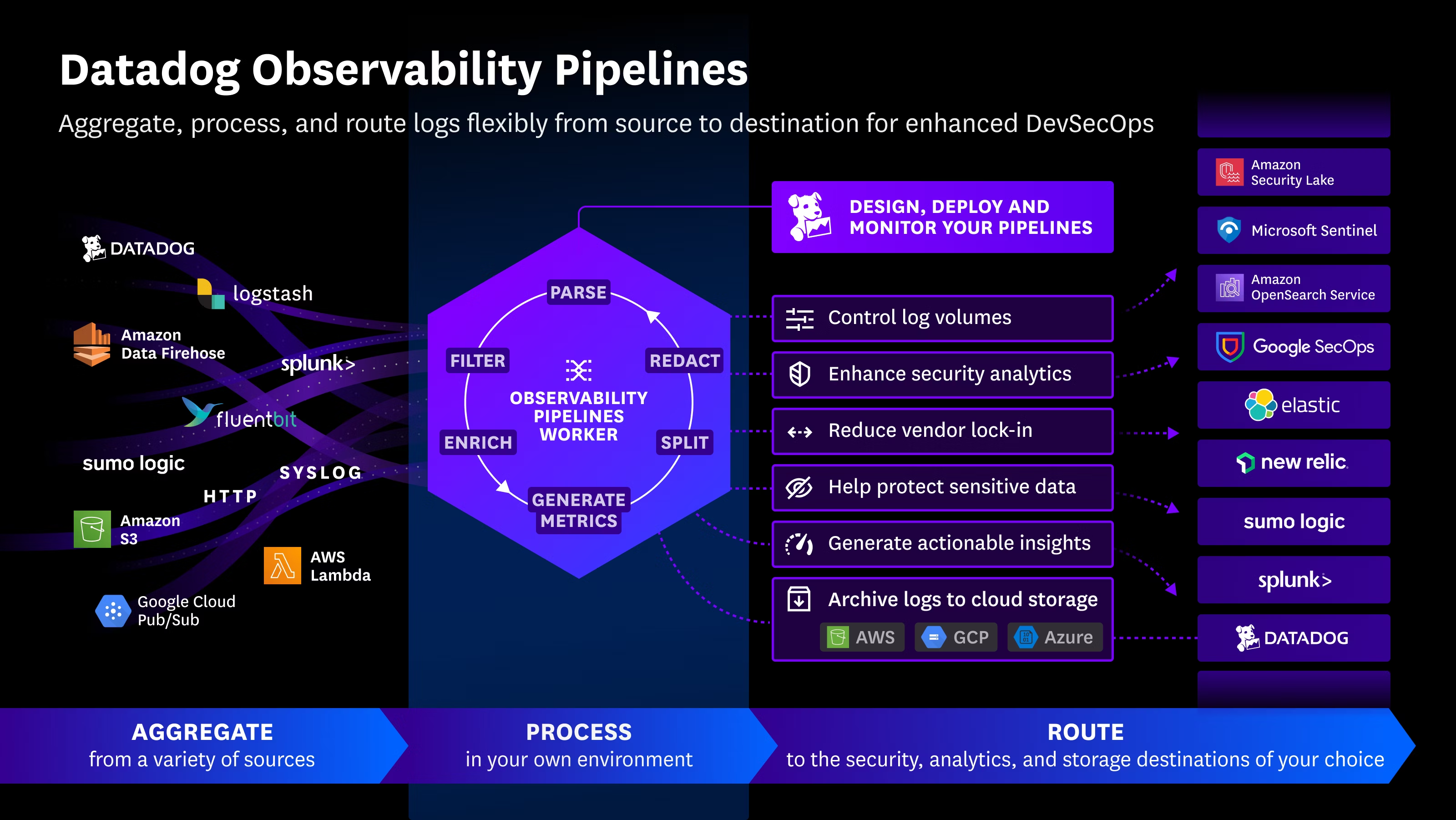

For organizations in cybersecurity, observability, and compliance, it is time to consider how to integrate AI-driven vulnerability scanning into existing DevSecOps pipelines, not as a replacement for human expertise but as a force multiplier.
