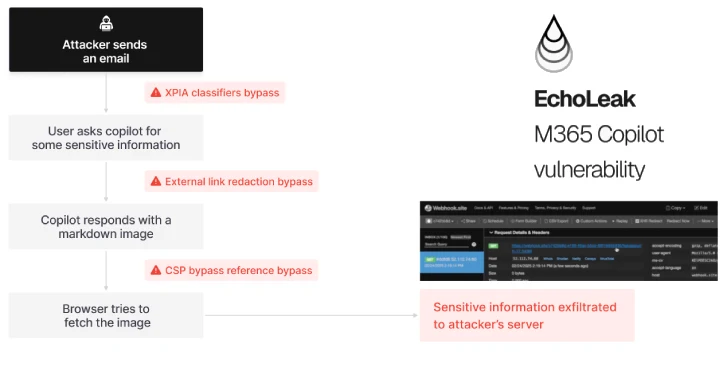

A newly discovered threat called EchoLeak has been classified as a zero-click vulnerability affecting Microsoft 365 Copilot, allowing threat actors to exfiltrate sensitive internal data without any user interaction.

The vulnerability is tracked as CVE-2025-32711 with a critical CVSS score of 9.3. Microsoft has already patched the issue as part of the June 2025 security updates. While there’s no evidence of active exploitation in the wild, the design flaw behind EchoLeak poses a serious risk to businesses using AI-powered productivity tools.

What is EchoLeak and How Does It Work?

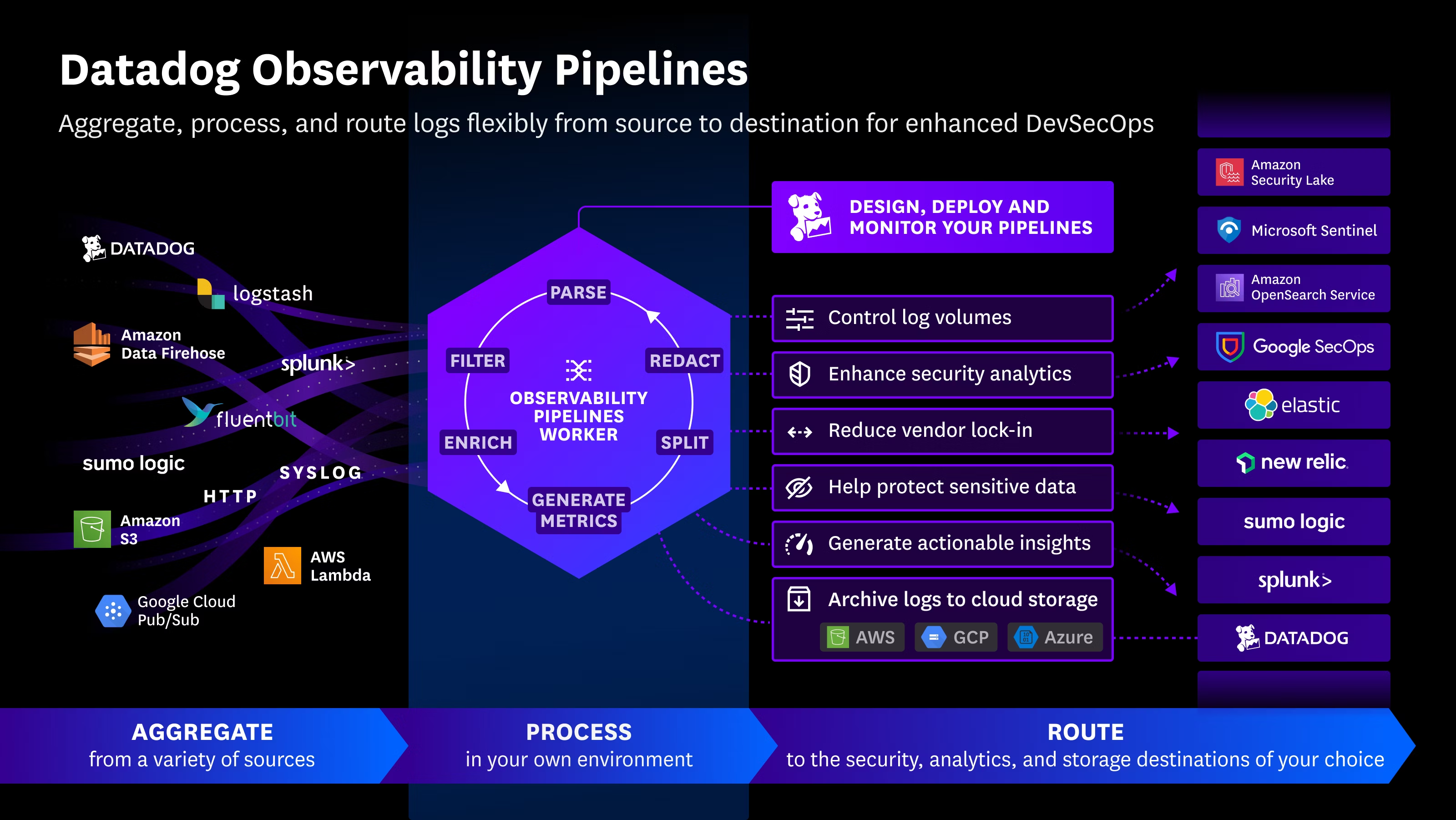

EchoLeak exploits a design issue called LLM Scope Violation, discovered by Israeli cybersecurity firm Aim Security. The problem lies in how Copilot blends trusted internal data with untrusted external content—like emails—without proper isolation of trust levels.

The attack unfolds in four steps:

- Injection: An attacker sends a seemingly harmless email containing a malicious Markdown-formatted prompt.

- User query: The victim asks Copilot a legitimate business question (e.g., “Summarize our latest earnings report”).

- Scope Violation: Copilot’s RAG engine combines the untrusted input with internal data.

- Leakage: Sensitive information is silently returned to the attacker through links or responses involving Microsoft Teams or SharePoint.

No clicks. No malware. Just Copilot doing what it’s designed to do—automating tasks—but without boundaries.

When AI Turns Against Itself

Aim Security explains that EchoLeak turns the LLM against itself by manipulating it into leaking the most sensitive data available in the current context, even in single-turn or multi-turn conversations.

This is not a traditional exploit—it’s a subtle, context-aware manipulation that leverages the AI’s own capabilities to prioritize and surface high-value information.

Broader Threats: Tool Poisoning & MCP Vulnerabilities

In parallel, new attack vectors targeting the Model Context Protocol (MCP)—used to connect AI agents with external tools—have emerged. These include:

Key techniques:

- Full-Schema Poisoning (FSP): Goes beyond tool descriptions to inject malicious payloads into any part of the schema, fooling the LLM into unsafe behaviors.

- Advanced Tool Poisoning Attacks (ATPA): Tricks the agent into exposing sensitive data (e.g., SSH keys) using fake tool error messages.

- MCP DNS Rebinding Attacks: Exploits browser loopholes to access internal MCP servers via SSE (Server-Sent Events), even if they’re only available on

localhost.

These techniques expose a dangerous reality: AI agents can be manipulated via tool definitions and context injection to perform unauthorized actions or data leaks.

Recommendations for Organizations

To mitigate the risks posed by EchoLeak and similar vulnerabilities, organizations should:

- Apply all June 2025 Microsoft security patches.

- Enforce context isolation when using AI systems.

- Restrict what AI agents can access in internal tools like SharePoint, Teams, or GitHub.

- Validate the

Originheader on all MCP server requests. - Transition from SSE to more secure alternatives like Streamable HTTP.

Final Thoughts

EchoLeak is more than a vulnerability—it’s a wake-up call for companies that rely on AI to automate knowledge work. It highlights how trust boundaries in AI must be explicitly defined to avoid silent data leaks and systemic abuse.

AI assistants are powerful, but without security-by-design, they can become liabilities instead of assets.

Sources

- The Hacker News. (2025, June 12). Zero-Click AI Vulnerability Exposes Microsoft 365 Copilot Data Without User Interaction. https://thehackernews.com/

- CVE Details. CVE-2025-32711 – Microsoft 365 Copilot AI Prompt Injection Scope Violation. https://www.cvedetails.com/

- Aim Security Blog. EchoLeak: LLM Scope Violation and AI Command Injection in M365 Copilot. https://www.aimsecurity.ai/blog

- CyberArk Labs. Tool Poisoning Attacks and Full-Schema Exploits in AI Agents. https://www.cyberark.com/resources

- GitHub Security Blog. DNS Rebinding Risks in AI Assistant Architectures Using SSE. https://github.blog/

Español

Español